Run demo

Locally

We assume that you have already installed and properly configured Python, the latest pip, the latest setuptools, and Docker with access to pull images from DockerHub. If something is missing or needs to be updated, refer to the Installation or Troubleshooting sections.
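Before installing, it can help to confirm that the tools named above are on your PATH. The following is a minimal sketch, not part of CWL-Airflow: the helper name `have_tool` is hypothetical, and the executable names `python`, `pip` and `docker` are assumptions (some systems expose `python3` and `pip3` instead). It only reports what it finds and does not check versions or DockerHub access.

```shell
#!/bin/sh
# have_tool NAME: succeeds when NAME resolves to a command on PATH.
# (Hypothetical helper for this sketch.)
have_tool() {
    command -v "$1" >/dev/null 2>&1
}

missing=0
# Executable names are assumptions; adjust them to your system.
for tool in python pip docker; do
    if have_tool "$tool"; then
        echo "found:   $tool"
    else
        echo "missing: $tool"
        missing=$((missing + 1))
    fi
done
echo "$missing prerequisite(s) missing"
```

If anything is reported as missing, the Installation and Troubleshooting sections are the place to look.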

Install cwl-airflow

$ pip install cwl-airflow==1.0.16 --find-links https://michael-kotliar.github.io/cwl-airflow-wheels/ # --user

--user - explained in the Installation section

Init configuration

$ cwl-airflow init

Run demo

$ cwl-airflow demo --auto

For every submitted workflow you will get the following information

CWL-Airflow demo mode
Process demo workflow 1/3
Load workflow
- workflow: # path from where we load the workflow file
- job:      # path from where we load the input parameters file
- uid:      # unique identifier for the submitted job
Save job file as
- # path where we save submitted job for CWL-Airflow to run

uid - the unique identifier used for DAG ID and output folder name generation.

When all demo workflows are submitted, the program will provide you with a link to the Airflow web interface (by default it is accessible at localhost:8080). It may take some time (usually less than half a minute) for the Airflow web interface to load and display all the data.

On completion the workflow results will be saved in the current folder.
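Because the Airflow web interface can take a little while to come up, a small polling loop saves repeated manual reloads. The sketch below assumes `curl` is installed and that the interface is at the default localhost:8080. The function name `wait_for_url` and its arguments are hypothetical and are not part of CWL-Airflow.

```shell
#!/bin/sh
# wait_for_url URL [ATTEMPTS] [DELAY_SECONDS]
# Polls URL with curl until it answers without an HTTP error status.
# Returns 0 once the URL responds, 1 if every attempt fails.
# (Hypothetical helper for this sketch.)
wait_for_url() {
    url=$1
    attempts=${2:-30}
    delay=${3:-1}
    i=0
    while [ "$i" -lt "$attempts" ]; do
        # --fail makes curl exit non-zero on HTTP errors (status >= 400).
        if curl --silent --fail --output /dev/null --max-time 2 "$url"; then
            return 0
        fi
        i=$((i + 1))
        sleep "$delay"
    done
    return 1
}

# Example call, left commented out so that sourcing this file has no side effects:
# wait_for_url http://localhost:8080 30 1 && echo "Airflow web interface is up"
```

The defaults of 30 attempts one second apart roughly match the half-minute mentioned above; raise them on slower machines.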

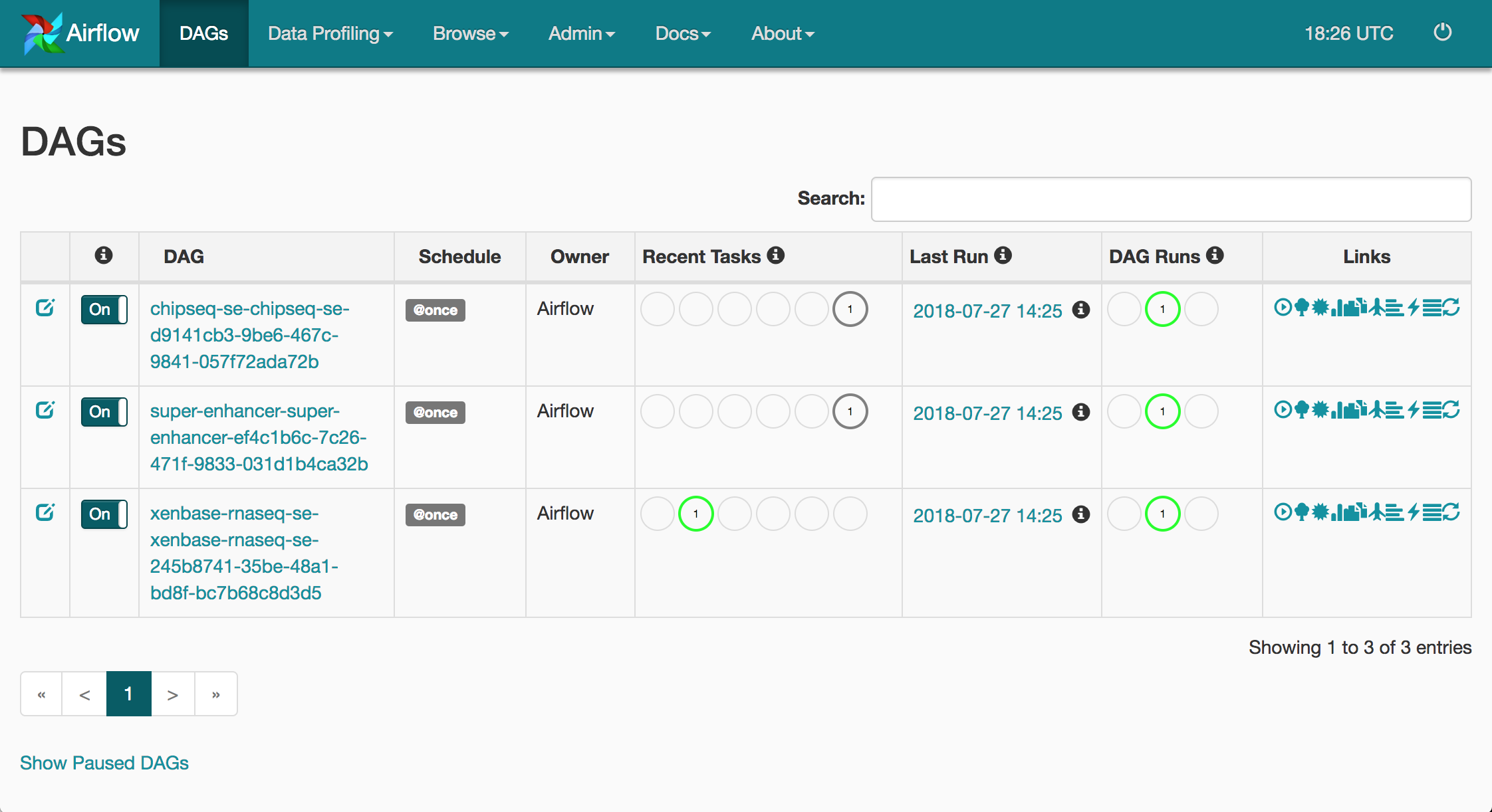

Airflow web interface


VirtualBox

To run the CWL-Airflow virtual machine, you have to install Vagrant and VirtualBox. The host machine should have access to the Internet, at least 8 CPUs, and 16 GB of RAM.
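The CPU and RAM minimums above can be checked from a shell before starting. This is a rough sketch under stated assumptions: `getconf _NPROCESSORS_ONLN` is available on Linux and macOS, while reading `/proc/meminfo` works on Linux only, so on other hosts the RAM figure falls back to 0. The helper `at_least` and the variable names are hypothetical.

```shell
#!/bin/sh
# Minimums stated in the text above.
REQUIRED_CPUS=8
REQUIRED_RAM_GB=16

# at_least ACTUAL REQUIRED: succeeds when ACTUAL >= REQUIRED (integers).
# (Hypothetical helper for this sketch.)
at_least() {
    [ "$1" -ge "$2" ]
}

# Number of online CPUs; 0 if getconf cannot report it.
cpus=$(getconf _NPROCESSORS_ONLN 2>/dev/null || echo 0)

# MemTotal is given in kB on Linux; empty (treated as 0) elsewhere.
ram_kb=$(awk '/^MemTotal:/ {print $2}' /proc/meminfo 2>/dev/null || true)
# Round up to whole GiB, since a 16 GB machine reports slightly less than 16 GiB.
ram_gb=$(( (${ram_kb:-0} + 1048575) / 1048576 ))

if at_least "$cpus" "$REQUIRED_CPUS"; then
    echo "CPUs: $cpus (ok)"
else
    echo "CPUs: $cpus (fewer than $REQUIRED_CPUS)"
fi

if at_least "$ram_gb" "$REQUIRED_RAM_GB"; then
    echo "RAM:  ${ram_gb} GB (ok)"
else
    echo "RAM:  ${ram_gb} GB (less than $REQUIRED_RAM_GB GB, or could not be detected)"
fi
```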

Clone CWL-Airflow repository

$ git clone https://github.com/Barski-lab/cwl-airflow

Choose one of three possible configurations to run

Single node

$ cd ./cwl-airflow/vagrant/local_executor

Celery Cluster of 3 nodes (default queue)

$ cd ./cwl-airflow/vagrant/celery_executor/default_queue

Celery Cluster of 4 nodes (default + advanced queues)

$ cd ./cwl-airflow/vagrant/celery_executor/custom_queue

Start virtual machine

$ vagrant up
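The choice among the three configurations comes down to which directory `vagrant up` is run from. If you switch between them often, a small lookup helper can make that explicit. In this sketch the function name `vagrant_dir_for` and the short labels `single`, `default` and `custom` are hypothetical; only the paths come from the steps above.

```shell
#!/bin/sh
# vagrant_dir_for LABEL: prints the Vagrant directory for a configuration.
# Labels are hypothetical shorthand for this sketch:
#   single  - single node
#   default - Celery cluster of 3 nodes (default queue)
#   custom  - Celery cluster of 4 nodes (default + advanced queues)
vagrant_dir_for() {
    case "$1" in
        single)  echo "./cwl-airflow/vagrant/local_executor" ;;
        default) echo "./cwl-airflow/vagrant/celery_executor/default_queue" ;;
        custom)  echo "./cwl-airflow/vagrant/celery_executor/custom_queue" ;;
        *)       echo "unknown configuration: $1" >&2; return 1 ;;
    esac
}

# Example, left commented out because it starts virtual machines:
# cd "$(vagrant_dir_for single)" && vagrant up
```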

Vagrant will pull the latest virtual machine image (about 3.57 GB) from Vagrant Cloud. Once the virtual machine has started, the following folders will be created on the host machine in the current directory.

├── .vagrant
└── airflow
    ├── dags
    │   └── cwl_dag.py           # creates DAGs from CWLs
    ├── demo
    │   ├── cwl
    │   │   ├── subworkflows
    │   │   ├── tools
    │   │   └── workflows        # demo workflows
    │   │       ├── chipseq-se.cwl
    │   │       ├── super-enhancer.cwl
    │   │       └── xenbase-rnaseq-se.cwl
    │   ├── data                 # input data for demo workflows
    │   └── job                  # sample job files for demo workflows
    │       ├── chipseq-se.json
    │       ├── super-enhancer.json
    │       └── xenbase-rnaseq-se.json
    ├── jobs                     # folder for submitted job files
    ├── results                  # folder for workflow outputs
    └── temp                     # folder for temporary data

Connect to the running virtual machine through ssh

$ vagrant ssh master

Submit all demo workflows for execution

$ cd /home/vagrant/airflow/results
$ cwl-airflow demo --manual

For every submitted workflow you will get the following information

CWL-Airflow demo mode
Process demo workflow 1/3
Load workflow
- workflow: # path from where we load the workflow file
- job:      # path from where we load the input parameters file
- uid:      # unique identifier for the submitted job
Save job file as
- # path where we save submitted job for CWL-Airflow to run

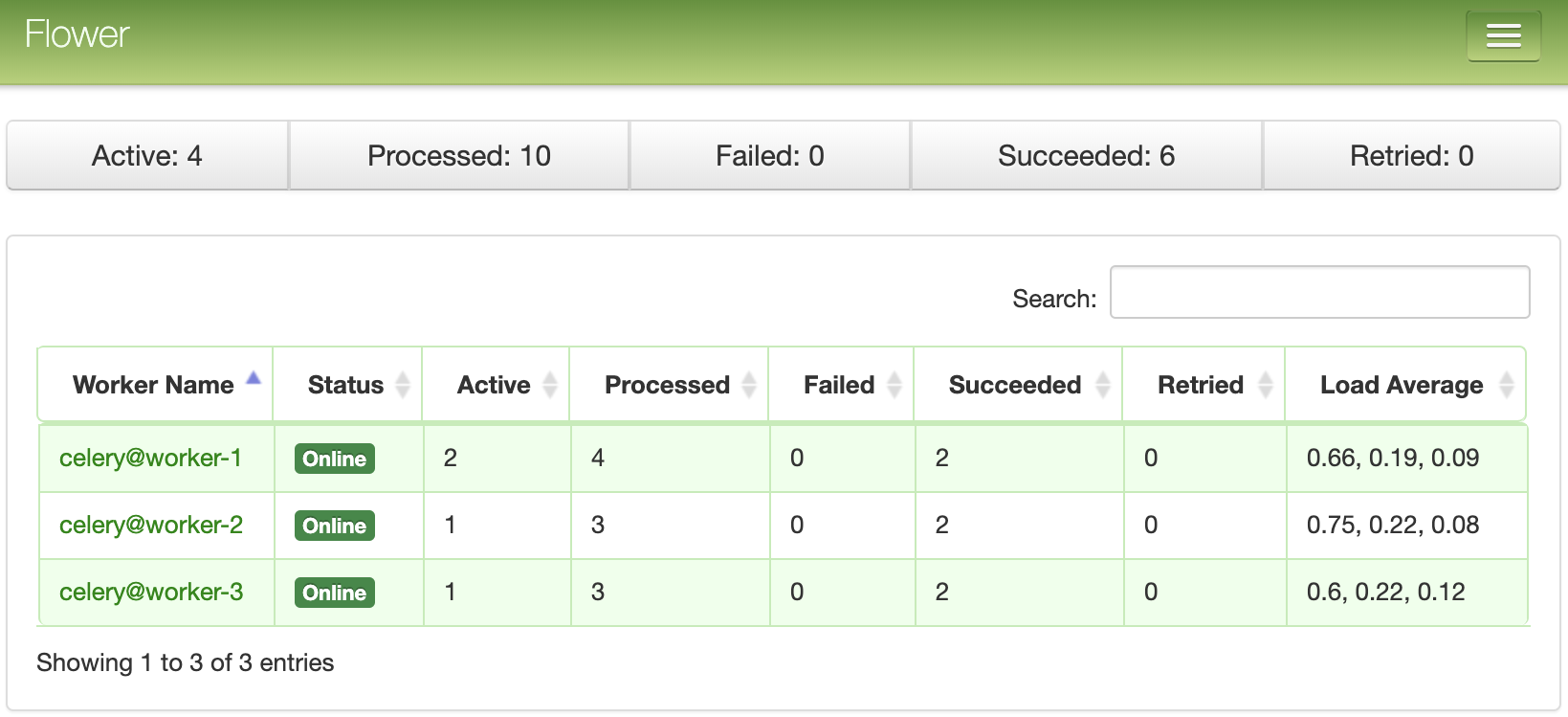

uid - the unique identifier used for DAG ID and output folder name generation.

Open the Airflow web interface (localhost:8080) and, if a multi-node configuration is running, the Celery Flower Monitoring Tool (localhost:5555). It might take up to 20 seconds for the Airflow web interface to display all newly added workflows.

On completion, you can view workflow execution results in the /home/vagrant/airflow/results folder of the virtual machine or in the ./airflow/results folder on your host machine.

Close the ssh connection to the virtual machine by pressing Ctrl+D and then run one of the following commands

$ vagrant halt # stop virtual machines

or

$ vagrant destroy # remove virtual machines
$ rm -rf ./airflow .vagrant # remove created folders

Dashboard of the Celery monitoring tool Flower
